1.0 Introduction

Welcome to Polarr's SDK. Here you will find thorough documentation on how to use our framework to build beautiful creations.

Download

git clone //swift repo

1.1 Use Cases

Computational photography is a growing field with abundant use cases. Here are some we found necessary and actionable with our current SDK platform:

- Passport photo creation.

- Finding old friends and acquaintances in a camera roll, defining closeness.

- Auto emoji reaction suggestion for photos.

- Auto gallery formed from best photos, auto cropped, with 3D/depth effects.

- Filter videos in a camera roll by mood.

- Receipt and document album cleaning.

1.2 Getting Started

After importing PolarrKit you will be able to use the following models.

| Model | Output |
|---|---|
| SmartCrop | Normalized coordinates to crop the parent image. |
| Segmentation | Masked image of a provided subject matter. |
| SimilarityGrouping | Groups of similar photos. |
| AestheticScoring | Aesthetic scoring of an image. |
| Depth | Depth map generated from any photo. |

import PolarrKit

2.0 SmartCrop

Our SmartCrop model takes two simple parameters: an image and an optional aspect ratio to constrain the results. If no aspect ratio is provided, the best-scoring crop is chosen.

Retrieving the best scored crop:

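// UIImage(contentsOfFile:) is failable, so unwrap it first (assumes we are inside a function).
guard let sourceImage = UIImage(contentsOfFile: "/path/to/image") else { return }

if let crop = SmartCrop(on: sourceImage) {
    // crop holds the normalized crop coordinates
}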

Constraining the results to crops with an aspect ratio of 1.0 (i.e. squares):

if let crop = SmartCrop(on: sourceImage, aspectRatio: 1.0) {
    // crop holds the normalized crop coordinates
}

2.1 Inputs

| Parameters | Type |
|---|---|
| on: | UIImage or CVPixelBuffer |
| aspectRatio: | Double (Optional) |

2.2 Outputs

| Output | Type |
|---|---|
| SmartCrop.Output | CGRect |
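Because the crop is returned in normalized coordinates, it has to be scaled to pixel units before it can be applied to an image. The following is a minimal sketch, assuming the rectangle is normalized to the range 0...1 along both axes (the origin convention is not stated here and should be checked). `pixelRect(fromNormalized:imageWidth:imageHeight:)` is a hypothetical helper, not part of PolarrKit.

```swift
import Foundation

/// Hypothetical helper (not part of PolarrKit): scales a rect normalized
/// to 0...1 into pixel units for an image of the given size.
func pixelRect(fromNormalized rect: CGRect, imageWidth: Int, imageHeight: Int) -> CGRect {
    let width = CGFloat(imageWidth)
    let height = CGFloat(imageHeight)
    return CGRect(x: rect.origin.x * width,
                  y: rect.origin.y * height,
                  width: rect.size.width * width,
                  height: rect.size.height * height)
}

// Made-up normalized crop on a 4000 x 2000 pixel image.
let normalizedCrop = CGRect(x: 0.25, y: 0.0, width: 0.5, height: 1.0)
let pixelCrop = pixelRect(fromNormalized: normalizedCrop, imageWidth: 4000, imageHeight: 2000)

// Aspect ratio of the crop in pixels (width divided by height).
let pixelAspectRatio = pixelCrop.size.width / pixelCrop.size.height
print(pixelCrop, pixelAspectRatio)
```

Note that a rectangle that is not square in normalized units can still be square in pixels, as in this example, because the image itself is not square.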

BEFORE:

AFTER:

3.0 Segmentation

Our Segmentation model takes two simple parameters: an image and a label naming the subject to segment.

If no label is provided, the label "person" is used automatically.

Retrieving the segmented result:

// UIImage(contentsOfFile:) is failable, so unwrap it first (assumes we are inside a function).
guard let sourceImage = UIImage(contentsOfFile: "/path/to/image") else { return }

if let segmentedDog = Segmentation(on: sourceImage, label: "dog") {
    // If there is a visible dog, it will be segmented out into a new UIImage object.
}

Labels supported by our model:

["background", "person", "bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck", "boat", "traffic light", "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse", "sheep", "cow", "elephant", "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee", "skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove", "skateboard", "surfboard", "tennis racket", "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple", "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "couch", "potted plant", "bed", "dining table", "toilet", "tv", "laptop", "mouse", "remote", "keyboard", "cell phone", "microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors", "teddy bear", "hair drier", "toothbrush"]
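Since only the labels listed above are supported, it can help to validate user-supplied text before passing it as a label. The sketch below assumes labels must match the list exactly in lowercase, which is not confirmed here. `normalizedLabel(_:)` is a hypothetical helper, not part of PolarrKit, and the set is abbreviated for brevity.

```swift
import Foundation

// Abbreviated for this example; a real check would contain every label listed above.
let supportedLabels: Set<String> = [
    "background", "person", "bicycle", "car", "cat", "dog", "teddy bear"
]

/// Hypothetical helper (not part of PolarrKit): trims and lowercases a label,
/// returning nil when the result is not in the supported set.
func normalizedLabel(_ raw: String) -> String? {
    let candidate = raw.trimmingCharacters(in: .whitespacesAndNewlines).lowercased()
    return supportedLabels.contains(candidate) ? candidate : nil
}

print(normalizedLabel(" Dog ") ?? "unsupported")
print(normalizedLabel("unicorn") ?? "unsupported")
```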

3.1 Inputs

| Parameters | Type |
|---|---|
| on: | UIImage |
| label: | String (Optional, defaults to "person") |

3.2 Outputs

| Output | Type |
|---|---|
| TextureToImage.Output | UIImage |

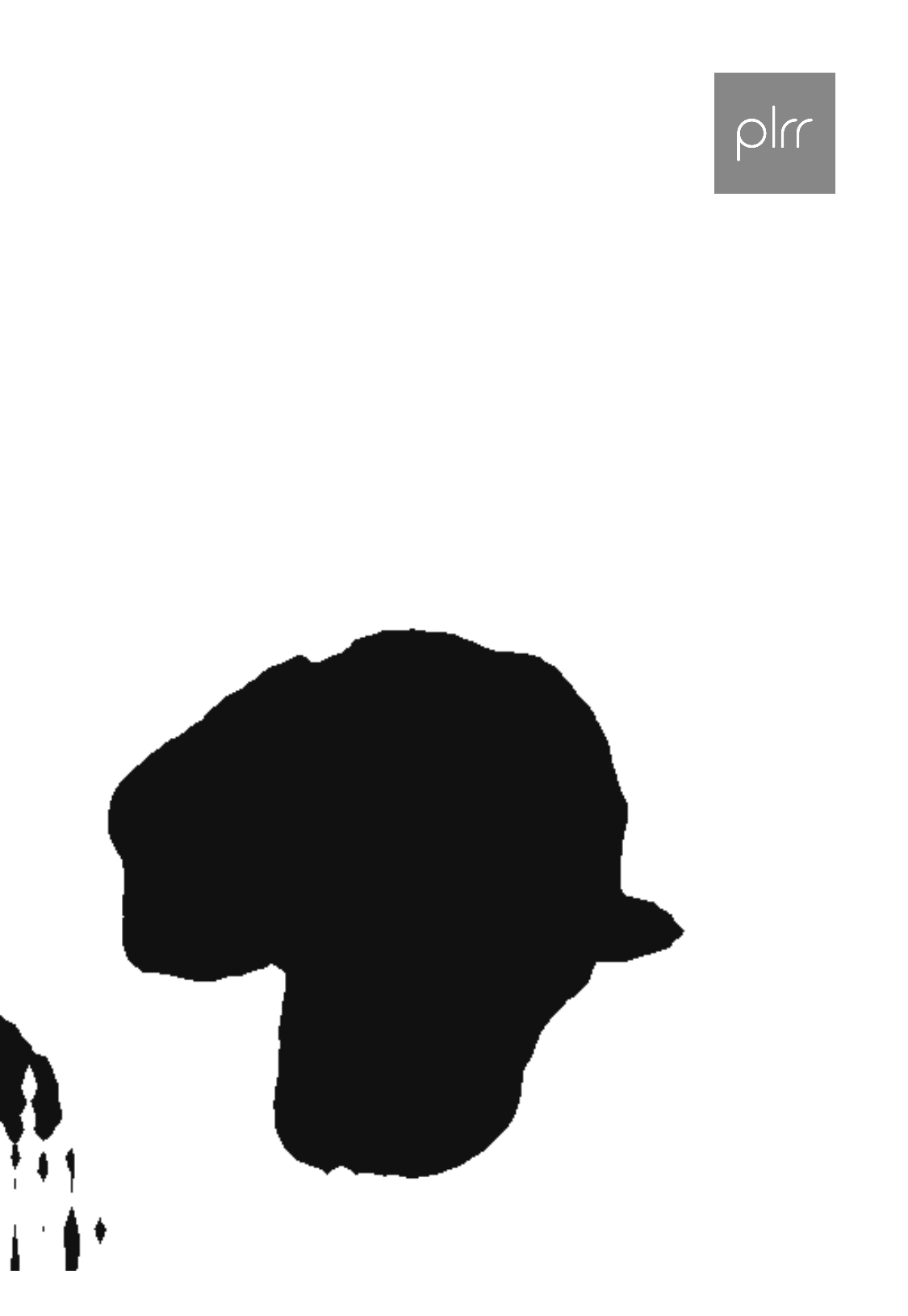

BEFORE:

AFTER:

SegmentationMask:

if let segmentationMaskOfDog = SegmentationMask(on: sourceImage, label: "dog") {
    // The segmentation mask of the dog.
}

4.0 Similarity Grouping

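Similarity Grouping takes an array of images and outputs a two-dimensional integer array. Each inner array is one group of images judged similar; going by the example output, the integers appear to be indices into the input array.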

Retrieving the similarity array:

// UIImage(contentsOfFile:) is failable; compactMap drops images that fail to load,
// which shifts the indices of the images that follow.
let paths = ["/1.JPG", "/2.JPG", "/3.JPG", "/4.JPG", "/5.JPG", "/6.JPG", "/7.JPG"]
let sourceImages = paths.compactMap { UIImage(contentsOfFile: $0) }

if let similarities = SimilarityGrouping(on: sourceImages) {
    // Example output: [[0], [1], [2], [3], [4], [5, 6]]
}

4.1 Inputs

| Parameters | Type |
|---|---|
| on: | [UIImage] |

4.2 Outputs

| Output | Type |
|---|---|
| SimilarityGrouping.Output | [[Int]] |
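To act on the grouping, the integers usually need to be mapped back to the original photos. The sketch below assumes they are indices into the input array, as the example output suggests. `resolveGroups(_:in:)` is a hypothetical helper, not part of PolarrKit, and file names stand in for images.

```swift
/// Hypothetical helper (not part of PolarrKit): maps index groups back to the
/// original items. Out-of-range indices are skipped instead of trapping.
func resolveGroups<T>(_ groups: [[Int]], in items: [T]) -> [[T]] {
    return groups.map { group in
        group.filter { items.indices.contains($0) }.map { items[$0] }
    }
}

let fileNames = ["1.JPG", "2.JPG", "3.JPG", "4.JPG", "5.JPG", "6.JPG", "7.JPG"]
let exampleOutput = [[0], [1], [2], [3], [4], [5, 6]]

let grouped = resolveGroups(exampleOutput, in: fileNames)

// Keep only groups with more than one member, i.e. photos that have a look-alike.
let lookAlikes = grouped.filter { $0.count > 1 }
print(lookAlikes)
```

Groups with a single member are photos with no detected match, so filtering on the group size is one simple way to surface near-duplicates for cleanup.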

5.0 Aesthetic Scoring

Aesthetic scores can be computed for any image:

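// UIImage(contentsOfFile:) is failable, so unwrap it first (assumes we are inside a function).
guard let sourceImage = UIImage(contentsOfFile: "/path/to/image") else { return }

if let aestheticScore = AestheticScoring(on: sourceImage) {
    // Individual scores are accessed as properties, e.g. aestheticScore.colorHarmony
}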

5.1 Inputs

| Parameters | Type |
|---|---|
| on: | UIImage or CVPixelBuffer |

5.2 Outputs

| Output | Type |
|---|---|
| .colorHarmony | Double |
| .depthOfField | Double |
| .lighting | Double |
| .Output.vividColor | Double |
| .Output.motionBlur | Double |
| .Output.interestingContent | Double |
| .objectsEmphasis | Double |
| .compositionalBalance | Double |
| .ruleOfThirds | Double |
| .repetition | Double |
| .symmetry | Double |
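One way to use these scores is to rank a set of photos, for example to build a gallery of the best shots. The sketch below assumes that higher values are better and that the fields share a comparable scale, neither of which is stated here. `ExampleScores` is a stand-in type covering three of the documented fields, not the SDK's own output type, and the unweighted mean is an arbitrary choice.

```swift
/// Stand-in for the SDK's score output, limited to three of the documented fields.
struct ExampleScores {
    let colorHarmony: Double
    let lighting: Double
    let symmetry: Double

    /// Unweighted mean of the three fields; the weighting is arbitrary for this sketch.
    var mean: Double {
        return (colorHarmony + lighting + symmetry) / 3.0
    }
}

// Made-up scores for three photos.
let scored: [(name: String, scores: ExampleScores)] = [
    (name: "a.jpg", scores: ExampleScores(colorHarmony: 0.25, lighting: 0.5, symmetry: 0.75)),
    (name: "b.jpg", scores: ExampleScores(colorHarmony: 0.75, lighting: 0.75, symmetry: 0.75)),
    (name: "c.jpg", scores: ExampleScores(colorHarmony: 0.25, lighting: 0.25, symmetry: 0.25))
]

// Sort from highest to lowest mean score and keep only the names.
let ranked = scored.sorted { $0.scores.mean > $1.scores.mean }.map { $0.name }
print(ranked)
```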

6.0 Depth

Passing an image to Depth returns an image-based depth map. Its pixel values can be iterated over to analyze depth across the photo or to drive depth-based effects.

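// UIImage(contentsOfFile:) is failable, so unwrap it first (assumes we are inside a function).
guard let sourceImage = UIImage(contentsOfFile: "/path/to/image") else { return }

if let depthMap = Depth(on: sourceImage) {
    // depthMap is an image
}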

6.1 Inputs

| Input | Type |
|---|---|
| on: | UIImage |

6.2 Outputs

| Output | Type |
|---|---|
| Depth.Output | UIImage |

The varying grayscale pixel values can be used to build a depth point cloud with SceneKit.
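The point cloud idea can be sketched as follows, assuming the grayscale values have already been extracted from the depth map into a row-major array of 8-bit values (that extraction step is not shown). Whether brighter pixels mean nearer or farther is not stated here, so the sketch maps the gray value directly to z. `DepthPoint` and `pointCloud(fromGray:width:height:maxDepth:)` are hypothetical names, not part of PolarrKit.

```swift
/// A simple 3D point; in an app, SceneKit's SCNVector3 could take its place.
struct DepthPoint: Equatable {
    let x: Float
    let y: Float
    let z: Float
}

/// Hypothetical helper (not part of PolarrKit): turns row-major 8-bit grayscale
/// values into one point per pixel, with z scaled to the range 0...maxDepth.
func pointCloud(fromGray pixels: [UInt8], width: Int, height: Int,
                maxDepth: Float = 1.0) -> [DepthPoint] {
    precondition(pixels.count == width * height, "pixel count must equal width * height")
    var points: [DepthPoint] = []
    points.reserveCapacity(pixels.count)
    for row in 0..<height {
        for column in 0..<width {
            let gray = Float(pixels[row * width + column]) / 255.0
            points.append(DepthPoint(x: Float(column), y: Float(row), z: gray * maxDepth))
        }
    }
    return points
}

// A 2 x 2 depth map: black, white, white, black.
let cloud = pointCloud(fromGray: [0, 255, 255, 0], width: 2, height: 2)
print(cloud.count)
```

In SceneKit, points like these could then be converted to SCNVector3 values and supplied to a geometry source for rendering.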

7.0 Advanced

7.1 Pipelines

let segmentation = [
    "mask": Segmentation(label: "person") --> ImageToTexture() --> GaussianBlurTexture(sigma: 3),
    "image": ImageToTexture()
] --> MaskTexture() --> TextureToImage()
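For readers unfamiliar with custom operators, the sketch below shows one way a chaining operator like `-->` could be defined in Swift, using plain function composition. It is an illustration only and makes no claim about how PolarrKit implements its pipelines; `PipelinePrecedence`, `timesTwo`, `plusOne`, and `describe` are hypothetical names.

```swift
// Left-associative so that a --> b --> c is read as (a --> b) --> c.
precedencegroup PipelinePrecedence {
    associativity: left
}
infix operator -->: PipelinePrecedence

/// Composes two stages: the output of the left stage feeds the right stage.
func --> <A, B, C>(lhs: @escaping (A) -> B, rhs: @escaping (B) -> C) -> (A) -> C {
    return { input in rhs(lhs(input)) }
}

let timesTwo: (Int) -> Int = { $0 * 2 }
let plusOne: (Int) -> Int = { $0 + 1 }
let describe: (Int) -> String = { "value: \($0)" }

// Stages run left to right: double, then add one, then format.
let pipeline = timesTwo --> plusOne --> describe
print(pipeline(5))
```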